Autoencoder Implementation in TensorFlow

Autoencoders are an unsupervised learning technique in which a neural network is trained to reconstruct its own input, and in doing so learns a compact representation of the data.
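To make the idea concrete, here is a minimal NumPy sketch of the encode, bottleneck, decode pattern and of the reconstruction error an autoencoder is trained to minimise. It is only an illustration: the names `w_enc`, `w_dec`, `encode`, `decode` and `reconstruction_mse` are hypothetical, the 8-dimensional bottleneck is an arbitrary choice, and the weights are random rather than trained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed toy sizes: 784-dimensional inputs (a flattened 28x28 image)
# squeezed through an 8-dimensional bottleneck.
input_dim, latent_dim = 784, 8

# Randomly initialised (untrained) weights; training would adjust these.
w_enc = rng.normal(scale=0.01, size=(input_dim, latent_dim))
w_dec = rng.normal(scale=0.01, size=(latent_dim, input_dim))


def encode(x):
    """Map inputs to the low-dimensional code (linear layer + ReLU)."""
    return np.maximum(x @ w_enc, 0.0)


def decode(z):
    """Map codes back to input space (linear layer + sigmoid)."""
    return 1.0 / (1.0 + np.exp(-(z @ w_dec)))


def reconstruction_mse(x):
    """Mean squared error between inputs and their reconstructions."""
    return float(np.mean((x - decode(encode(x))) ** 2))


x = rng.random((5, input_dim))  # stand-in batch of 5 "images" in [0, 1)
z = encode(x)
x_hat = decode(z)

print(z.shape, x_hat.shape)  # (5, 8) (5, 784)
print(reconstruction_mse(x))
```

Training amounts to adjusting the encoder and decoder weights so that this reconstruction error becomes small, which forces the low-dimensional code to retain the information needed to rebuild the input.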

With TensorFlow's Keras API, implementing an autoencoder is straightforward, as shown below.

First, we load the Fashion MNIST dataset as follows:

    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd
    import tensorflow as tf

    from tensorflow.keras import layers, losses
    from tensorflow.keras.datasets import fashion_mnist
    from tensorflow.keras.models import Model

    # Labels are discarded: the autoencoder only needs the images.
    (x_train, _), (x_test, _) = fashion_mnist.load_data()

    # Scale pixel values from [0, 255] to [0, 1].
    x_train = x_train.astype('float32') / 255.
    x_test = x_test.astype('float32') / 255.

    print(x_train.shape)  # (60000, 28, 28)
    print(x_test.shape)   # (10000, 28, 28)

We can code a fully connected autoencoder as follows:

    latent_dim = 64

    class Autoencoder(Model):
        def __init__(self, latent_dim):
            super(Autoencoder, self).__init__()
            self.latent_dim = latent_dim
            # Encoder: flatten the 28x28 image and compress it to latent_dim values.
            self.encoder = tf.keras.Sequential([
                layers.Flatten(),
                layers.Dense(128, activation='relu'),
                layers.Dense(latent_dim, activation='relu'),
            ])
            # Decoder: expand the code back to 784 pixels and reshape to 28x28.
            self.decoder = tf.keras.Sequential([
                layers.Dense(128, activation='relu'),
                layers.Dense(784, activation='sigmoid'),
                layers.Reshape((28, 28))
            ])

        def call(self, x):
            encoded = self.encoder(x)
            decoded = self.decoder(encoded)
            return decoded

    autoencoder = Autoencoder(latent_dim)

Because the autoencoder is trained to reproduce real-valued pixel intensities, reconstruction can be treated as a regression problem, so we train the model with mean squared error as the loss:

autoencoder.compile(optimizer='adam', loss=losses.MeanSquaredError())

autoencoder.fit(x_train, x_train,

epochs=10,

shuffle=True,

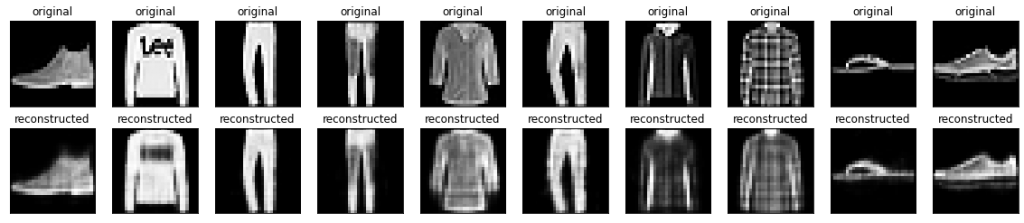

validation_data=(x_test, x_test))The result of the original and reconstructed images are shown below
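A side-by-side comparison of this kind can be produced with a small plotting helper. The sketch below is one possible way to do it, not necessarily how the figure in this post was made: `plot_reconstructions` is a hypothetical helper, and random arrays stand in for the real images so that the block runs on its own.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np


def plot_reconstructions(originals, reconstructions, n=10):
    """Draw the first n originals (top row) above their reconstructions (bottom row)."""
    n = min(n, len(originals), len(reconstructions))
    fig, axes = plt.subplots(2, n, figsize=(2 * n, 4), squeeze=False)
    rows = [(originals, "original"), (reconstructions, "reconstructed")]
    for row, (images, title) in enumerate(rows):
        for i in range(n):
            ax = axes[row, i]
            ax.imshow(images[i], cmap="gray")
            ax.set_title(title)
            ax.axis("off")
    return fig


# Stand-in data (assumption): noisy copies play the role of reconstructions.
rng = np.random.default_rng(0)
fake_originals = rng.random((10, 28, 28))
noise = rng.normal(scale=0.1, size=fake_originals.shape)
fake_reconstructions = np.clip(fake_originals + noise, 0.0, 1.0)

fig = plot_reconstructions(fake_originals, fake_reconstructions, n=5)
```

With the trained model, one would pass `x_test` and `autoencoder.predict(x_test)` instead of the stand-in arrays, then call `fig.savefig(...)` or, with an interactive backend, `plt.show()`.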


November 15, 2021